Executive summary

Polygon Proof of Stake went through several hours of slow finality that disrupted checkpoint emission and state sync. Core teams shipped new Bor and Heimdall builds and coordinated a controlled hard fork on mainnet. Finality returned to a steady rhythm, checkpoints resumed on a predictable schedule, and fresh nodes began syncing without stalls. A companion upgrade named Rio is scheduled on the Amoy testnet so client maintainers can validate configuration edge cases and keep environments aligned.

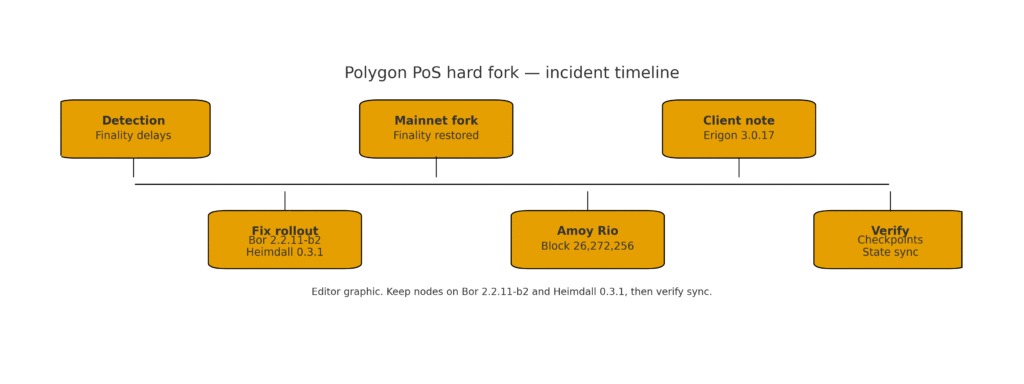

Incident timeline

Detection

Validators and infrastructure providers began to notice longer intervals between produced blocks and confirmed milestones. Receipt queries took longer. State sync on freshly provisioned nodes advanced unevenly.

Fix rollout

Engineering teams published Bor version 2.2.11-beta2 together with Heimdall version 0.3.1. Rollouts started on canary sentries and a small validator set while observability panels watched peer counts, gossip, and proof handling.

Mainnet fork

With enough validators upgraded, the network executed a hard fork that stabilized consensus. Shortly after activation, the ratio of produced blocks to finalized blocks converged to the historical baseline. Checkpoint spacing normalized and RPC error rates fell.

Amoy Rio

To mirror the mainnet fix and practice the runbook, Rio is scheduled on Amoy at a defined block height. Testnet activation lets operators verify behavior across more node types and flags any corner cases before future production maintenance windows.

Client note

Data access environments that rely on Erigon received guidance about versions and read paths. Operators who run mixed stacks were reminded to align component versions and rehearse snapshot-based recovery in case resyncs are needed.

Verify

Once the network looked healthy, teams still performed post-incident checks. The focus remained on checkpoint cadence, state sync progress from a clean snapshot, and receipt reliability during peak demand.

Figure 1. Incident timeline for Polygon PoS showing detection

Node operator checklist

Before maintenance

Create fresh backups for validator keys and the Heimdall database.

Export your dashboards and alerts for finality lag, checkpoint spacing, peer count and RPC latency.

Notify downstream teams about the restart window so traffic can be throttled if needed.

Upgrade steps

Update Bor to version 2.2.11-beta2.

Update Heimdall to version 0.3.1.

Restart sentry nodes first and watch peer stability. Then proceed with validators in waves.

If your data layer uses Erigon, read the current advisory and pin to the recommended release before returning full traffic.
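The restart order described above (sentries first, then validators in waves) can be sketched as a small planning helper. This is only an illustration: the host names and the wave size are hypothetical, and the actual restart commands depend on how your nodes are deployed.

```python
from typing import List


def plan_restart_waves(
    sentries: List[str], validators: List[str], wave_size: int
) -> List[List[str]]:
    """Return restart waves: all sentries first, then validators in fixed-size groups."""
    if wave_size < 1:
        raise ValueError("wave_size must be at least 1")
    # Sentries form the first wave so peer stability can be observed early.
    waves = [list(sentries)] if sentries else []
    for start in range(0, len(validators), wave_size):
        waves.append(validators[start:start + wave_size])
    return waves


# Hypothetical host names, used only for illustration.
waves = plan_restart_waves(
    ["sentry-1", "sentry-2"], ["val-1", "val-2", "val-3"], wave_size=2
)
for number, hosts in enumerate(waves, start=1):
    print(f"wave {number}: {hosts}")
```

Pausing between waves to confirm peer counts keeps a bad build from reaching every validator at once.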

Health checks after restart

Confirm Bor shows healthy peer counts and that block height matches your reference.

Check that Heimdall resumes span syncing and begins broadcasting checkpoints on schedule.

Run receipt and log queries at moderate load. Record percentile latencies so you can compare with your pre-incident baseline.

Start a fresh state sync from a recent snapshot and measure time to full catch up.
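The first check in this list can be approximated with two standard Ethereum JSON-RPC calls, `eth_blockNumber` and `net_peerCount`, which both return hex-encoded quantities. The sketch below deliberately leaves out the HTTP transport and only evaluates the raw result strings. The thresholds `max_lag_blocks` and `min_peers` are assumptions for illustration, not recommended values.

```python
def parse_quantity(hex_value: str) -> int:
    """Decode a JSON-RPC hex quantity such as '0x1a' into an integer."""
    return int(hex_value, 16)


def node_looks_healthy(
    local_height_hex: str,
    reference_height_hex: str,
    peer_count_hex: str,
    max_lag_blocks: int = 5,  # assumed threshold, tune to your setup
    min_peers: int = 10,      # assumed threshold, tune to your setup
) -> bool:
    """Compare a node's height with a reference and check its peer count."""
    lag = parse_quantity(reference_height_hex) - parse_quantity(local_height_hex)
    peers = parse_quantity(peer_count_hex)
    # A node that is ahead of the reference has a negative lag and passes.
    return lag <= max_lag_blocks and peers >= min_peers


# Example values standing in for eth_blockNumber and net_peerCount results.
print(node_looks_healthy("0x64", "0x66", "0x19"))
```

Feeding the function with results from your own node and from an independent reference endpoint gives a quick pass or fail signal after each restart.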

Recovery playbook

If a node stalls, clear pending queues and rebuild the Heimdall database from a trusted snapshot.

If RPC answers look inconsistent, route reads to a clean endpoint while the affected node resyncs.

If a validator misses attestations repeatedly, lower the gas target for a short period and raise telemetry sampling to spot resource pressure.
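One way to decide which endpoint is "clean" when RPC answers look inconsistent is to request the block hash at the same height from every endpoint and treat the majority answer as the reference. The sketch below assumes those hashes have already been collected; the endpoint names are hypothetical, and a simple majority is an assumption rather than a guarantee of correctness.

```python
from collections import Counter
from typing import Dict, List, Tuple


def split_by_majority_hash(
    hash_by_endpoint: Dict[str, str]
) -> Tuple[List[str], List[str]]:
    """Return (clean, suspect) endpoint names based on the most common block hash."""
    if not hash_by_endpoint:
        return [], []
    counts = Counter(hash_by_endpoint.values())
    # On a tie, Counter keeps the hash that was seen first.
    majority_hash, _ = counts.most_common(1)[0]
    clean = sorted(n for n, h in hash_by_endpoint.items() if h == majority_hash)
    suspect = sorted(n for n, h in hash_by_endpoint.items() if h != majority_hash)
    return clean, suspect


# Hypothetical endpoints and hashes for the same block height.
clean, suspect = split_by_majority_hash(
    {"rpc-a": "0xaaa", "rpc-b": "0xaaa", "rpc-c": "0xbbb"}
)
print("route reads to:", clean, "resync:", suspect)
```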

Post upgrade health snapshot

Finality

Attestations return to a steady cadence and confirmation times trend toward the normal baseline. During busy hours, continue to track the produced to finalized ratio. Early drift there usually signals a peer or gossip issue.
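A rough way to watch for drift is to sample the latest produced block and the latest finalized block together, then compare the recent gap with your baseline. The sketch below uses the gap in blocks as a proxy for the produced to finalized ratio. The `tolerance` and `window` parameters are assumed values for illustration.

```python
from statistics import median
from typing import List, Tuple


def finality_lags(samples: List[Tuple[int, int]]) -> List[int]:
    """Each sample is (latest_block, finalized_block); return the gap per sample."""
    return [latest - finalized for latest, finalized in samples]


def lag_is_drifting(
    samples: List[Tuple[int, int]],
    baseline_lag: float,
    tolerance: float = 2.0,  # assumed multiplier over baseline
    window: int = 3,         # assumed number of recent samples to inspect
) -> bool:
    """True when the median of the most recent gaps exceeds baseline * tolerance."""
    recent = finality_lags(samples)[-window:]
    return bool(recent) and median(recent) > baseline_lag * tolerance


# Illustrative samples: the gap widens from 2 blocks to 12 blocks.
samples = [(100, 98), (110, 108), (120, 117), (130, 122), (140, 128)]
print(lag_is_drifting(samples, baseline_lag=2))
```

Using the median over a short window avoids alerting on a single slow sample.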

Checkpointing

Milestones flow again at regular intervals. Stable spacing across traffic spikes indicates that the fix tightened internal queues and improved timeout handling.
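Spacing can be checked by taking the timestamps at which checkpoints were observed and flagging gaps that fall outside a band around the expected interval. In this sketch the expected interval and the tolerance are placeholders supplied by the operator, not values taken from the Polygon protocol.

```python
from typing import List


def checkpoint_intervals(timestamps: List[int]) -> List[int]:
    """Return the gaps in seconds between consecutive checkpoint timestamps."""
    ordered = sorted(timestamps)
    return [later - earlier for earlier, later in zip(ordered, ordered[1:])]


def irregular_intervals(
    timestamps: List[int],
    expected_seconds: float,
    tolerance: float = 0.5,  # assumed: accept gaps within +/- 50% of expected
) -> List[int]:
    """Return the gaps that fall outside the accepted band."""
    low = expected_seconds * (1 - tolerance)
    high = expected_seconds * (1 + tolerance)
    return [gap for gap in checkpoint_intervals(timestamps) if not low <= gap <= high]


# Illustrative timestamps with one long gap in the middle.
observed = [0, 1800, 3600, 9000, 10800]
print(irregular_intervals(observed, expected_seconds=1800))
```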

State sync

New nodes advance without pauses. Time to sync from a snapshot becomes predictable. Compare that metric with your historical baseline to confirm the recovery.
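To make "time to sync" comparable across runs, one option is to record the node's height at intervals and estimate the remaining catch-up time from the observed rate. The sketch below is a simple linear estimate. The optional `head_blocks_per_second` parameter is an assumption that lets you subtract the rate at which the chain head itself advances.

```python
from typing import List, Optional, Tuple


def catch_up_eta_seconds(
    samples: List[Tuple[float, int]],
    target_height: int,
    head_blocks_per_second: float = 0.0,  # assumed chain growth rate, 0 = fixed target
) -> Optional[float]:
    """Estimate seconds until target_height from (unix_time, local_height) samples.

    Returns None when there is not enough data or the node is not gaining ground.
    """
    if len(samples) < 2:
        return None
    (t0, h0), (t1, h1) = samples[0], samples[-1]
    elapsed = t1 - t0
    if elapsed <= 0:
        return None
    net_rate = (h1 - h0) / elapsed - head_blocks_per_second
    if net_rate <= 0:
        return None  # stalled, or falling behind the head
    return max(target_height - h1, 0) / net_rate


# Illustrative progress: 2000 blocks synced in 100 seconds, 2000 remaining.
print(catch_up_eta_seconds([(0, 1000), (100, 3000)], target_height=5000))
```

A `None` result on a fresh node is a useful stall signal in its own right.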

RPC quality

Receipt and log endpoints meet your internal targets. Timeout errors and stale reads fade as caches warm. If elevated timeouts persist, validate that load balancers are routing to healthy backends only.
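Whether endpoints "meet internal targets" is easiest to judge with percentile latencies compared against a baseline recorded before the incident. The sketch below uses Python's `statistics.quantiles`. The `allowed_ratio` of 1.2 is an assumed tolerance, and the latency values are synthetic.

```python
from statistics import quantiles
from typing import Dict, List


def latency_percentiles(latencies_ms: List[float]) -> Dict[str, float]:
    """Return p50, p95 and p99 for a list of request latencies in milliseconds."""
    # n=100 yields 99 cut points; index k-1 is the k-th percentile.
    cuts = quantiles(latencies_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


def regressions(
    current: Dict[str, float],
    baseline: Dict[str, float],
    allowed_ratio: float = 1.2,  # assumed: tolerate up to 20% above baseline
) -> List[str]:
    """Return the percentile names whose current value exceeds the allowed ratio."""
    return [name for name in current if current[name] > baseline[name] * allowed_ratio]


# Synthetic latencies, used only to show the call shape.
current = latency_percentiles([float(ms) for ms in range(1, 102)])
print(current)
print(regressions(current, {"p50": 40.0, "p95": 90.0, "p99": 95.0}))
```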

Consensus participation

Validator participation stays high and evenly distributed. Keep an alert for peers that miss attestations after the fork, then review bandwidth, disk latency and database size on those machines.

Developer integration notes

Event ingestion

Backfill any indexing gaps that appeared during the incident. Validate idempotency so reprocessing does not insert duplicates. Where possible, run diff checks against a small set of transactions with known results.
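Idempotent reprocessing usually comes down to a natural key plus an insert that ignores conflicts. The sketch below uses an in-memory SQLite table keyed on transaction hash and log index. The table name, columns, and sample rows are hypothetical, so adapt them to your indexer's schema.

```python
import sqlite3
from typing import Iterable, Tuple

Event = Tuple[str, int, str]  # (tx_hash, log_index, payload)


def open_store() -> sqlite3.Connection:
    """Create an in-memory store with a composite primary key per event."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE events ("
        " tx_hash TEXT NOT NULL,"
        " log_index INTEGER NOT NULL,"
        " payload TEXT,"
        " PRIMARY KEY (tx_hash, log_index))"
    )
    return conn


def ingest(conn: sqlite3.Connection, events: Iterable[Event]) -> int:
    """Insert events, skipping duplicates, and return how many rows were new."""
    before = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
    conn.executemany(
        "INSERT OR IGNORE INTO events (tx_hash, log_index, payload) VALUES (?, ?, ?)",
        list(events),
    )
    conn.commit()
    after = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
    return after - before


conn = open_store()
batch = [("0xabc", 0, "transfer"), ("0xabc", 1, "approval")]
print(ingest(conn, batch))  # first pass inserts both rows
print(ingest(conn, batch))  # replaying the same backfill inserts nothing
```

With this shape, a backfill can overlap the range that was already indexed without creating duplicates.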

Reorg tolerance

Maintain your existing confirmation depth on Polygon Proof of Stake for at least one full day after the fix. Reduce the depth only if finality metrics remain stable and your error budget is intact.

Third party providers

If you failed over to external RPC during the incident, route traffic back to your primary path after health checks complete. Update analytics and quotas so monitoring reflects the switch.

Batch jobs

Resume time-bounded jobs that depend on receipt availability. Start with a small batch, verify outputs, then scale to the full dataset.
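The "small batch, verify, then scale" pattern can be sketched as a ramp that grows the batch size only while verification keeps passing. The starting size, growth factor, and the `process` and `verify` callables below are all placeholders for your own job logic.

```python
from typing import Callable, Iterator, List, Sequence


def ramp_batches(items: Sequence, first_batch: int = 10, growth: int = 2) -> Iterator[Sequence]:
    """Yield consecutive slices of items whose size grows by `growth` each step."""
    if first_batch < 1 or growth < 1:
        raise ValueError("first_batch and growth must be at least 1")
    size, start = first_batch, 0
    while start < len(items):
        yield items[start:start + size]
        start += size
        size *= growth


def run_with_ramp(
    items: Sequence,
    process: Callable,
    verify: Callable[[Sequence, List], bool],
    first_batch: int = 10,
    growth: int = 2,
) -> int:
    """Process batches until one fails verification; return the count verified."""
    done = 0
    for batch in ramp_batches(items, first_batch, growth):
        outputs = [process(item) for item in batch]
        if not verify(batch, outputs):
            break  # stop scaling up and investigate before continuing
        done += len(batch)
    return done


# Placeholder job: double each value and verify the result.
total = run_with_ramp(
    list(range(35)),
    process=lambda x: x * 2,
    verify=lambda batch, out: all(o == 2 * b for b, o in zip(batch, out)),
    first_batch=5,
)
print(total)
```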

Monitoring

Add dedicated panels for checkpoint spacing, state sync catch-up rate and RPC percentile latencies. Tie alerts to those panels so on-call engineers see actionable signals early.

Technical root cause summary

The slowdown combined RPC pressure with consensus edge cases. Under stress, certain nodes delayed gossip and proof handling, which widened the gap between produced blocks and confirmed checkpoints. Fixes in the new Bor and Heimdall builds tightened internal queues, refined timeout logic and made checkpoint scheduling more predictable. Once enough validators moved to those builds, the hard fork locked in the behavior and the network settled back into a healthy rhythm.

Bottom line

Polygon Proof of Stake is back to steady finality thanks to aligned client releases and a coordinated hard fork. Checkpoint cadence looks regular and state sync behaves as expected on fresh nodes. Operators should remain on the new Bor and Heimdall versions, watch the same core signals for a full day, and only then relax confirmation depths or reopen high volume traffic. With these steps, dApps can return to their usual operating posture with confidence.

External links

Polygon Foundation update on successful hard fork and restored state sync

The Block coverage of Polygon hard fork addressing finality bug

Crypto News report on Polygon finality delays and the fix

Cryptopolitan follow up on finality restored and checkpoints processing

Polygon forum note for Amoy Rio hard fork scheduling and client versions

Erigon 3.0.17 release note adding support for Rio on Amoy
